From arstechnica.com:

It’s not unusual to hear that a particular military technology has found its way into other applications, which then revolutionized our lives. From the imaging sensors that were refined to fly on spy satellites to advanced aerodynamics used on every modern jetliner, many of these ideas initially sounded like bad science fiction.

So did this one.

Consider the following scenario:

To defend the United States and Canada, a massive array of interconnected radars is set up across the two nations. Connected by high-speed links to a distributed network of computers and radar scopes, Air Force personnel scan the skies for unexpected activity. One day, an unidentified aircraft is discovered, flying over the Arctic and heading toward the United States. A quick check of all known commercial flights rules out a planeload of holiday travelers lost over the Northern Canadian tundra. At headquarters, the flight is designated as a bogey, as all attempts to contact it have failed. A routine and usually uneventful intercept is therefore launched to fly alongside, identify the aircraft, and record registration information.

Before the intercept can be completed, more aircraft appear over the Arctic; an attack is originating from Russia. Readiness is raised to DEFCON 2, one step below that of nuclear war. Controllers across the country begin to get a high-level picture of the attack, which is projected on a large screen for senior military leaders. At a console, the intercept director clicks a few icons on his screen, assigning a fighter to its target. All the essential information is radioed directly to the aircraft’s computer, without talking to the pilot.

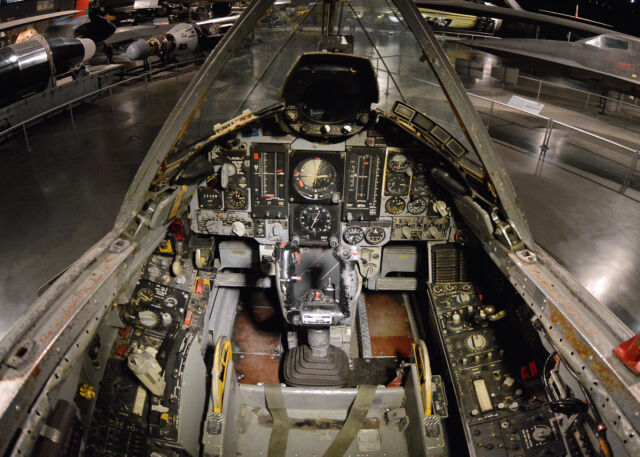

By the time the pilot is buckled into his seat and taxiing to the runway, all the data needed to destroy the intruder is loaded onboard. A callout of “Dolly Sweet” from the pilot acknowledges that the data load is good. Once the aircraft has lifted off the runway and the gear is up, a flip of a switch in the cockpit turns the flight over to the computers on the ground and the radar controllers watching the bogey. A large screen in the cockpit provides a map of the area and supplies key situational awareness of the target.

The entire intercept is flown hands-off, with the pilot only adjusting the throttle. The aircraft, updated with the latest data from ground controllers, adjusts its course to intercept the enemy bomber. Only when the target is within the fighter’s radar range does the pilot assume control, select a weapon, and fire. After a quick evasive maneuver, control returns to the autopilot, which flies the fighter back to base.

This isn’t an excerpt from a dystopian graphic novel or a cut-and-paste from a current aerospace magazine. In truth, it’s all ancient history. The system described above was called SAGE—and it was implemented in 1958.

SAGE, the Semi-Automatic Ground Environment, was the solution to the problem of defending North America from Soviet bombers during the Cold War. Air defense was largely ignored after World War II, as post-war demilitarization gave way to the explosion of the consumer economy. The test of the first Soviet atomic bomb changed that sense of complacency, and the US felt a new urgency to implement a centralized defense strategy. The expected attack scenario was waves of fast-moving bombers, but in the early 1950s, air defense was regionally fragmented and lacked a central coordinating authority. Countless studies tried to come up with a solution, but the technology of the time simply wasn’t able to meet expectations.

Whirlwind I

In the waning days of World War II, MIT researchers tried to design a facility for the Navy that would simulate an arbitrary aircraft design in order to study its handling characteristics. Originally conceived as an analog computer, the approach was abandoned when it became clear that the device would not be fast or accurate enough for such a range of simulations.

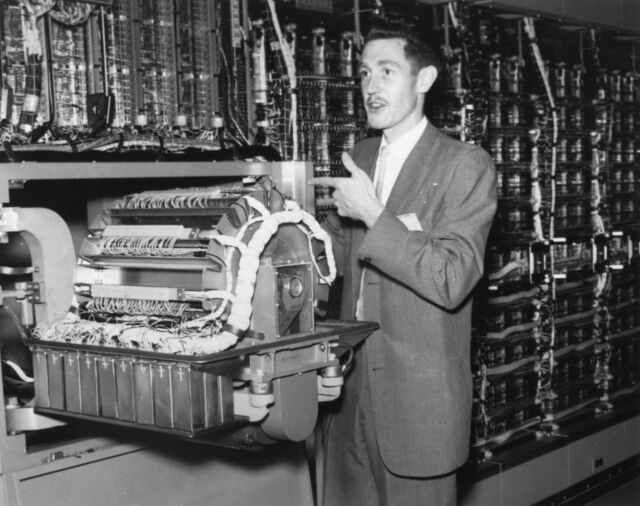

Attention then turned to Whirlwind I, a sophisticated digital system at MIT, with a 32-bit word length, 16 “math units,” and 2,048 words of memory made from mercury delay lines. Importantly, Whirlwind I had a sophisticated I/O system; it introduced the concept of cycle stealing during I/O operations, where the CPU is halted during data transfer.

After a few years, the Navy lost interest in the project due to its high cost, but the Air Force evaluated the system for air defense. After several radars in the Northeast United States were modified to send digital coordinates of the targets they were tracking, Whirlwind I proved that coordinating intercepts of bombers was practical. Key to this practicality were high-reliability vacuum tubes and the development of the first core memory. These two advances reduced the machine’s otherwise considerable downtime, and the increased processing speed soon made Whirlwind I four times faster than the original design.

SAGE architecture

Buoyed by the promising results, MIT, IBM, and the Air Force conceived what would become SAGE. North America would be divided into 23 sectors, each with its own “direction center,” several of which would feed into a “Combat Direction Central” system. Each direction center would be connected to a number of radars and have several fighter squadrons available to launch interceptors. If a direction center was destroyed in an attack, redundant communications links would be rerouted to a surviving center that would continue the air defense effort. To simplify the problem of redundancy, all centers used a technique called “cross-telling,” a synchronization protocol that exchanged data on tracking, aircraft, and bogeys.

Creating such a system was a massive undertaking. Stoked by Cold War fears of a nuclear attack, the Air Force developed an air defense architecture that was far beyond the current state of the art. Much of the size and complexity came from the requirement to defend the United States and Canada, an area nearly the size of the Soviet Union itself. The resulting effort became far larger than the Manhattan Project of World War II.

SAGE was the name of the overall system, which included not only the centers that housed the computers but the architecture of processing, the interceptors, the radars, and the ground-to-air missiles. The main computer itself, known as the AN/FSQ-7 in military parlance, was (and still is) the largest computer ever built. Consisting of two processors, one always active and the other operating in a standby mode, the AN/FSQ-7 required 49,000 vacuum tubes and 68K of 32-bit core memory. It operated at about 75,000 instructions per second. As moving-head disk drives were only just coming into commercial use, drum memory was used for permanent storage. Each of the 26 drums held about 150K, had an access time of 20 milliseconds, and was shared between processors and displays. Since any computer is blind and deaf without data flowing in and out, the processors also had a sophisticated I/O system connecting radars, displays, and other direction centers. Critically, the AN/FSQ-7 was a true real-time system, unlike the commercial batch-oriented systems that came before it and that persisted for many years afterward.

This required a huge physical plant. Each of the 23 centers was housed in a four-story blockhouse. (These were not hardened against nuclear blasts, but the two-meter-thick walls offered significant protection from potential attacks.) One 2,000-square-meter floor was dedicated to the 250-ton processors and their support electronics. Diesel generators supplied the 3 MW of power needed to keep each complex running.

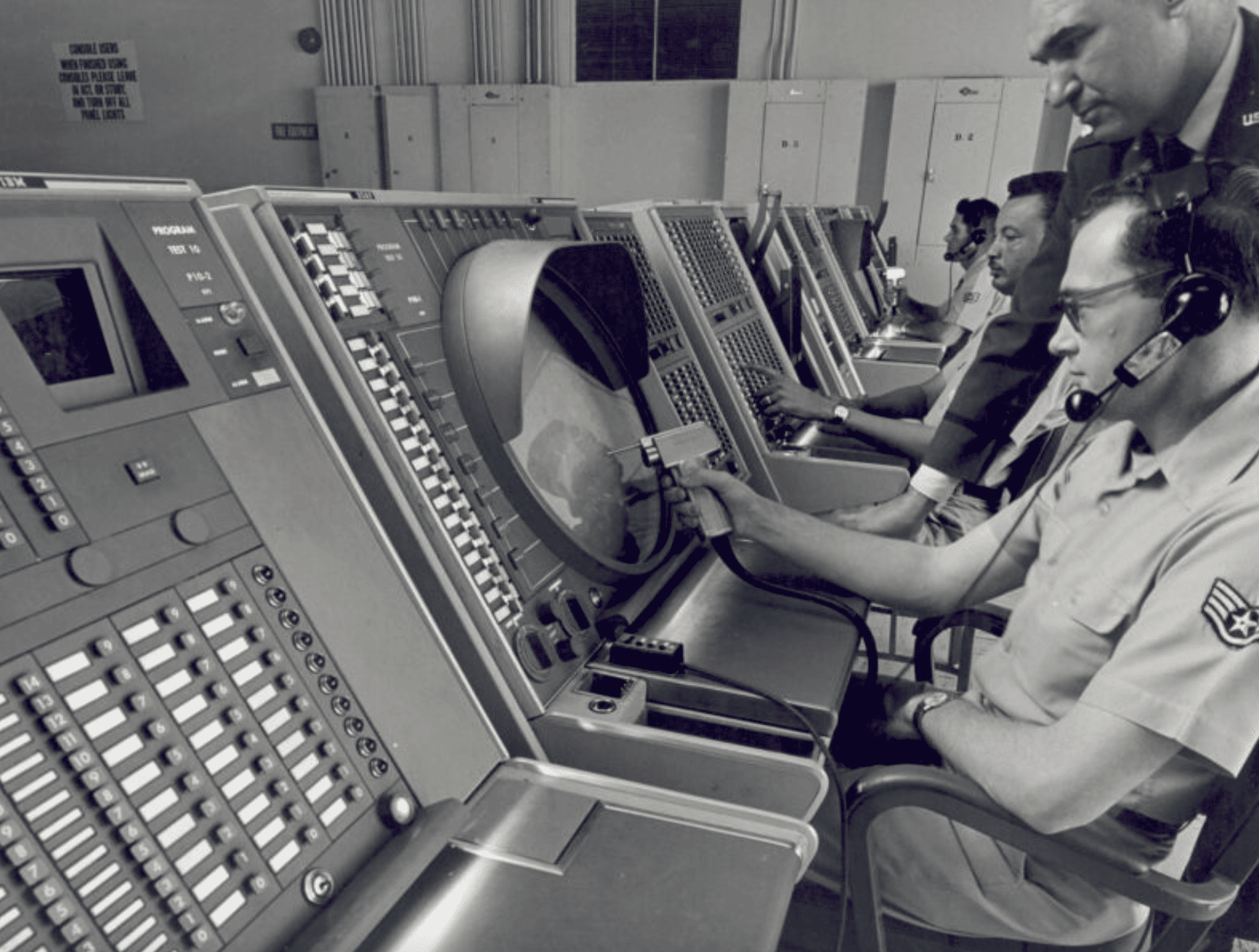

About 90 consoles were manned in each direction center, each tasked with a different part of the air defense problem. One group was for general air surveillance. Similar to what might be found in a modern air traffic control center, this group tracked all flights in a particular sector. If a controller confirmed an aircraft as an unknown, the data was forwarded to the weapons director. After evaluating the threat, the weapons director could order intercept missions or send targeting information to missile batteries around major cities.

The intercept director accepted the handoff from the weapons director and assigned individual aircraft to particular targets. When the director clicked on the fighter and the bogey, the system calculated an optimal intercept course and altitude and radioed them to the fighter. If radar detected a change in the target’s course, the system would automatically recalculate the intercept and send updated data to the fighter. No verbal communication with the pilot was necessary.
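To give a feel for the kind of geometry involved, here is a minimal Python sketch of an intercept calculation. It is not the SAGE algorithm, which the article does not describe; it assumes a flat two-dimensional map, a target flying a straight line at constant speed, and a fighter at constant speed, and it ignores altitude entirely. The function name `intercept_heading` and its parameters are hypothetical.

```python
import math

def intercept_heading(fighter_xy, fighter_speed, target_xy, target_vel):
    """Return (heading_deg, hours_to_intercept), or None if no intercept exists.

    Illustrative assumptions: flat 2-D map, constant speeds, straight-line
    target track. Heading is measured clockwise from north (the +y axis).
    """
    # Target position relative to the fighter.
    rx = target_xy[0] - fighter_xy[0]
    ry = target_xy[1] - fighter_xy[1]
    vx, vy = target_vel

    # The fighter meets the target when |r + v*t| = s*t, which expands to
    # (v.v - s^2) t^2 + 2 (r.v) t + r.r = 0.
    a = vx * vx + vy * vy - fighter_speed ** 2
    b = 2.0 * (rx * vx + ry * vy)
    c = rx * rx + ry * ry

    if abs(a) < 1e-12:
        # Equal speeds: the quadratic collapses to a linear equation.
        if abs(b) < 1e-12:
            return None
        roots = [-c / b]
    else:
        disc = b * b - 4.0 * a * c
        if disc < 0:
            return None
        sq = math.sqrt(disc)
        roots = [(-b - sq) / (2.0 * a), (-b + sq) / (2.0 * a)]

    times = [t for t in roots if t > 0]
    if not times:
        return None
    t = min(times)

    # Aim at the point where the target will be at time t.
    px = rx + vx * t
    py = ry + vy * t
    heading = math.degrees(math.atan2(px, py)) % 360.0
    return heading, t
```

When radar reports a new target course, the same function would simply be called again with the updated position and velocity, which mirrors the automatic recalculation described above.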

Pointing and clicking before “point and click”

Selecting a specific radar track on a screen full of blips would be completely impractical without some form of “point and click” interface for identifying a target. With the invention of the computer mouse still a decade away, Whirlwind engineers developed the “Light Gun,” a pistol-shaped pointing device that allowed controllers to select a target on the screen. Once the target was selected, the operator could assign a track identifier, order an intercept, or select a target for a ground-to-air missile.

The computer itself did not drive the displays directly. As the position and height of an aircraft were fed from radars into the processor, the tracking data was computed and written onto drums. Each console read from these drums, extracting only the data it was responsible for. The radar operator display was mostly decoupled from the processor, and its image was generated locally. A bank of switches on the console changed the display or changed the focus to a particular aircraft. With the processor freed from the duties of managing a large number of consoles, its limited horsepower was available to process incoming radar data.

Data-sharing on drums was a key part of the “cross-tell” feature. One of the two processors was always on standby and had to be ready to assume control in the event of a primary system crash. The primary processor was always updating the cross-telling drum, which the standby system would read if brought online. The standby system wasn’t always updating itself, as it was often preoccupied with maintenance or training exercises. With continual maintenance, the reliability of SAGE was impressive, especially for its era. On average, it experienced only about four hours of unplanned downtime per year.
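The primary and standby arrangement can be sketched in a few lines of Python. This is a toy model under stated assumptions, not a reconstruction of the AN/FSQ-7: the class names `Drum` and `Processor`, the dictionary of tracks, and the write-through update are all hypothetical stand-ins for the idea that the active processor keeps a shared drum current and the standby reads it only when it takes over.

```python
from dataclasses import dataclass, field

@dataclass
class Drum:
    """Stands in for the shared drum: holds the latest track snapshot."""
    tracks: dict = field(default_factory=dict)

@dataclass
class Processor:
    name: str
    drum: Drum
    active: bool = False
    tracks: dict = field(default_factory=dict)

    def update_track(self, track_id, position):
        """Record a track locally and write it through to the shared drum."""
        if not self.active:
            raise RuntimeError(f"{self.name} is on standby and cannot update tracks")
        self.tracks[track_id] = position
        self.drum.tracks[track_id] = position

    def take_over(self):
        """Load the last snapshot from the drum and become the active processor."""
        self.tracks = dict(self.drum.tracks)
        self.active = True


drum = Drum()
primary = Processor("A", drum, active=True)
standby = Processor("B", drum)

primary.update_track("T1", (10, 20))
standby_before = dict(standby.tracks)   # standby has not been following along

primary.active = False                  # simulate a primary crash
standby.take_over()
standby.update_track("T2", (1, 2))
```

The design choice the sketch highlights is that the standby never needs to mirror the primary continuously. It can be busy with maintenance or training and still resume from the most recent state on the drum.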

The first part of identifying friend from foe was knowing who was expected to be in the air. SAGE kept detailed records on all flight plans filed, and a controller could check a track against the list of flights. While this seems commonplace today, most airlines didn’t have computerized flight planning at the time. All information was entered by hand onto the SAGE storage drums using punch cards.
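Checking a track against filed flight plans amounts to asking whether any known flight should be near that spot right now. The Python sketch below illustrates the idea only; the `FlightPlan` fields, the straight-line dead-reckoning model, the 30 km tolerance, and the callsign in the usage example are all assumptions made for illustration, not details of how SAGE stored or matched flight plans.

```python
import math
from typing import NamedTuple, Optional

class FlightPlan(NamedTuple):
    callsign: str        # hypothetical identifier for the filed flight
    origin: tuple        # (x, y) departure point in km
    velocity: tuple      # (vx, vy) planned ground velocity in km/h
    departure_h: float   # departure time in hours

def expected_position(plan, now_h):
    """Dead-reckon where a filed flight should be at time now_h."""
    dt = now_h - plan.departure_h
    return (plan.origin[0] + plan.velocity[0] * dt,
            plan.origin[1] + plan.velocity[1] * dt)

def match_track(track_xy, now_h, plans, tolerance_km=30.0) -> Optional[str]:
    """Return the callsign of the closest plausible flight, or None if unknown."""
    best, best_dist = None, tolerance_km
    for plan in plans:
        if now_h < plan.departure_h:
            continue  # this flight has not departed yet
        ex, ey = expected_position(plan, now_h)
        dist = math.hypot(track_xy[0] - ex, track_xy[1] - ey)
        if dist <= best_dist:
            best, best_dist = plan.callsign, dist
    return best


plans = [FlightPlan("AA100", (0.0, 0.0), (800.0, 0.0), 1.0)]
known = match_track((810.0, 5.0), 2.0, plans)      # close to the filed route
unknown = match_track((0.0, 2000.0), 2.0, plans)   # nothing filed near here
```

A track that returns `None` here corresponds to the "unknown" that a controller would forward to the weapons director.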

The pointy end of the spear

SAGE was the ground-based component of air defense, and it required an equally capable fighter to intercept the bombers. Early plans were to use the popular but marginally performing F-102 as the frontline aircraft. After extensive redesigns of the F-102, Convair delivered a reimagined aircraft in the F-106, introducing it as the “Ultimate Interceptor.” The moniker was hardly hyperbole. Finely tuned aerodynamics exploited the latest NACA area rule concepts that gave the F-106 its distinctive “coke bottle” fuselage and exceptional performance. It remains the holder of the world speed record—Mach 2.3—for a single-engine aircraft. Its low wing loading and large engine made it very maneuverable, especially at high altitudes.

The F-106 was equipped with electronics that were far beyond the capabilities of any other aircraft of its day. The heart of the aircraft was the Hughes MA-1 system, which integrated all navigation, radar, communications, and autopilot functions in a 2,500-pound (1,140 kg) collection of boxes at the front of the aircraft. At the center of the MA-1 was the Hughes Digitair, the first digital airborne computer, an 18-bit, one’s-complement vacuum tube system with 2K words of core memory. Impressively, mission data was recorded to onboard drum storage, which could hold 13,000 words. All intercept data was stored on the drum, as was target information and radio and navigation data.

With such an automated system, the pilot needed a way to maintain situational awareness of the intercept. A large screen in the cockpit displayed a map of the area, projecting the positions and tracks of the fighter and its target in real time. This screen, known as the Tactical Situation Display, was updated with data from the direction center, giving the pilot “the big picture” of the attack, an essential feature when voice communication was not possible.

Operation Sky Shield

The nationwide two-day air-traffic shutdown after September 11, 2001, was unprecedented, but it was hardly the first time the FAA grounded all commercial traffic in the United States. Starting in 1960, three annual exercises called Sky Shield had the Air Force work with the FAA to ground all commercial and private flights for several hours during the drill. These international exercises were intended to test the capabilities of SAGE. Large groups of bombers were assigned targets to “attack” in the United States, with the SAGE units controlling the response with fighters and ground-to-air missiles.

The UK’s Royal Air Force, flying their new Vulcan bombers, got orders for the exercise, but the plucky Brits ignored some of the details. Flying their own attack profiles (essentially cheating) and using highly effective radar jammers, the Brits exposed wide gaps in SAGE capabilities. Despite a generally high success rate claimed for the fighters in “destroying” their targets, the best estimates were that only a quarter of the bombers were intercepted.

IBM’s benefits from SAGE

IBM had only recently entered the computing realm in the early 1950s, though it was already dominant in punch-card tabulating. With its emphasis on research and development and customer support, IBM was chosen by the Air Force in 1953 to design and construct the AN/FSQ-7 systems. While the project contributed about 10 percent to IBM’s bottom line for several years, the real benefit to IBM was access to the advanced designs at MIT and to revolutionary technologies such as core memory. As the SAGE project wound down, IBM engineers applied their accumulated skills to the company’s newer commercial offerings for years afterward.

While flying on airlines today has its own unique set of hassles, actually booking a flight is (relatively) painless. This wasn’t so in the 1950s, when schedulers went through racks of index cards, each with a particular flight’s info, all stored in what resembled a library card catalog. Only a few schedulers could fit around the card catalogs, and making a flight reservation could take an hour or two. Through a chance encounter, an IBM executive met the president of American Airlines, and they discussed how the airline’s needs paralleled the capabilities of SAGE. Recognizing the competitive advantages of a computerized reservation system, American contracted with IBM to develop SABRE. SABRE quickly became a huge success and, through multiple corporate reorganizations, now operates as Travelocity and Expedia.
